Part 5 of Machine Learning is Fun: Using Deep Learning for Language Translation and the Enchantment of Sequences

Update: There are several articles in this series. See the entire series: Parts 1, 2, 3, 4, 5, 6, 7, and 8 are highly recommended! This page is also available to read in Italiano, ό, Tiếng Việt, О通话, and Руcскиѹ.

Huge update: these articles served as the basis for my new book! It has a ton of fresh content, updates all of my articles, and offers a ton of practical coding assignments. Look it over right now!

Everyone is familiar with and fond of Google Translate, the service that appears to work like magic and can translate between 100 different human languages quickly. Even our phones and smartwatches can access it:

Machine Translation is the technology that powers Google Translate. People are now able to converse when it would not have been feasible otherwise, and this has revolutionized the globe.

However, as everyone knows, for the past fifteen years, high school students have been using Google Translate to help them with their Spanish homework. This is ancient news, right?

As it happens, deep learning has completely changed how we approach machine translation in the last two years. The world's top expert-built language translation systems are being beaten by very simple machine learning solutions created by deep learning researchers who have virtually no experience with language translation.

This innovative technology is known as sequence-to-sequence learning. It's an extremely effective method that may be applied to a wide range of issues. We'll study how the exact same algorithm may be used to create AI chatbots and describe images after seeing how it is used for translation.

Now let's move!

Creating Translations with Computers

So how can we teach a machine to interpret spoken language?

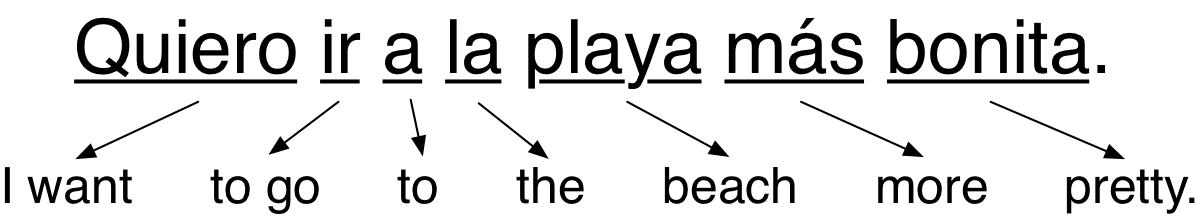

The easiest method is to use the translated word from the target language in lieu of each word in a phrase. Here is a basic illustration of word-for-word translation from Spanish to English:

This is simple to put into practice because all you need to look up the translation of each word is in a dictionary. However, because grammar and context are ignored, the outcomes are poor.

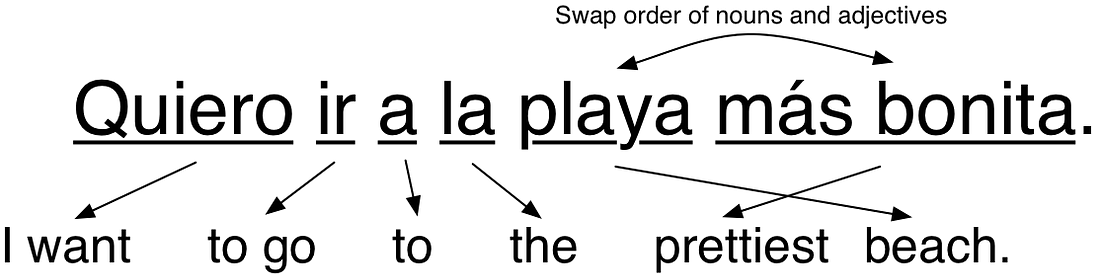

To improve the outcomes, you might next start implementing language-specific rules. Common two-word sentences, for instance, could be translated as a single group. Additionally, as nouns and adjectives typically appear in Spanish in the opposite order from how they appear in English, you might want to switch around the order:

That was successful! Our program ought to be able to translate any sentence if we just keep adding rules until we can handle every aspect of grammar, isn't that right?

The initial machine translation systems operated in this manner. Linguists developed intricate rules and individually programmed each one. During the Cold War, some of the world's most brilliant linguists spent years developing translation systems to make it easier to understand Russian communications.

Sadly, this was limited to publications with a straightforward structure, such as weather reports. With documents from the actual world, it wasn't dependable.

The issue is that there is no defined rule for human language. Human languages are rife with exceptions, regional differences, and outright rule-breaking. The English language has been shaped more by the people who invaded each other hundreds of years ago than by someone sitting down and making up rules for grammar.

Using Statistics to Improve Computer Translation

Following the failure of rule-based systems, models based not on grammatical rules but on probability and statistics were used to build alternative translation techniques.

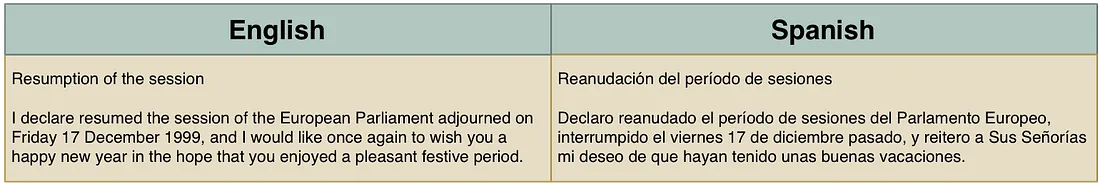

A large amount of training data—text translated into at least two languages—is needed to build a statistics-based translation system. Parallel corpora are these texts that have been translated twice. Similar to how scholars deciphered Egyptian hieroglyphs from Greek in the 1800s using the Rosetta Stone, computers may leverage parallel corpora to make educated guesses about how to translate text between languages.

Fortunately, there is already a ton of double-translated material in odd locations. The European Parliament, for instance, translates its sessions into twenty-one languages. In order to aid in the development of translation systems, researchers frequently employ that data.

Using Probability to Think

Statistical translation methods vary fundamentally in that they do not attempt to produce a single, accurate translation. Rather, they produce hundreds of translation possibilities and then order them according to how likely it is that each translation is accurate. Based on how closely something resembles the training set, they can determine how "correct" it is. This is how it operates:

Step1: Divide the first sentence into smaller parts

Initially, we divide our sentence into short, clearly comprehensible segments:

Step2: Look up every translation that could be done for each chunk

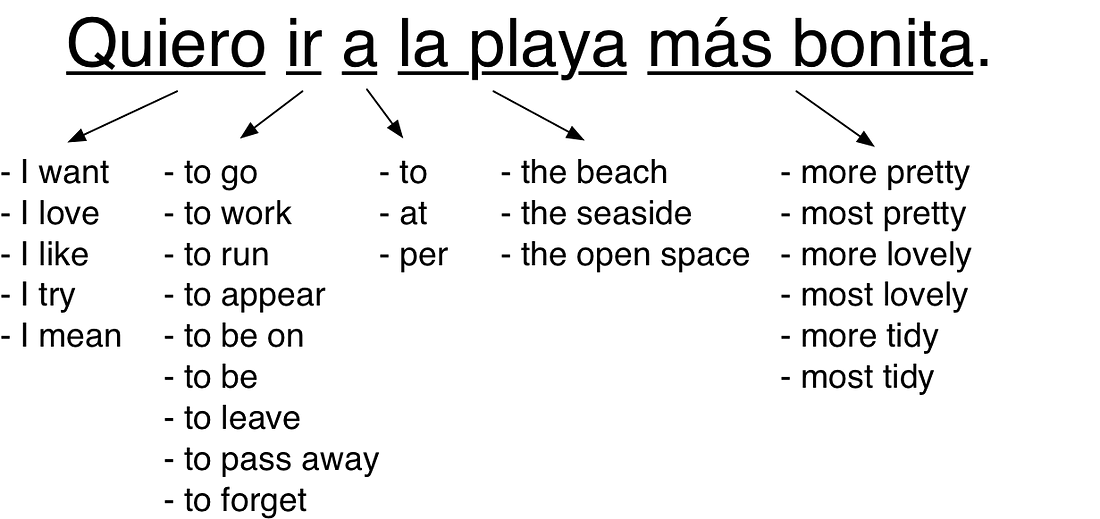

Next, using our training data, we will translate each of these chunks by identifying all the ways that people have translated those same word chunks.

It's crucial to remember that we are doing more than simply searching these passages in a translation dictionary. Rather, we are witnessing how real people turned these same word fragments into statements from everyday life. This aids in capturing the variety of applications they can have in various settings:

A greater number of these translations are employed than a lesser number. We can assign a score to each translation based on how often it occurs in our training data.

For instance, saying "Quiero" to indicate "I want" is far more prevalent than saying "I try." In order to give one translation more weight than another, we can use the frequency with which "Quiero" was translated to "I want" in our training data.

Step 3: Come up with every potential sentence and select the most plausible one.

Next, we'll create as many different words as we can by combining these parts in every way imaginable.

By mixing the chunks in different ways, we can already create approximately 2,500 alternative versions of our statement only from the chunk translations we listed in Step 2. Here are a few instances:

- I enjoy going to the coast where things are more organized

- To be on the most beautiful open place is what I mean.

- It's more pleasant for me to be by the sea.

- To go to the most tidiest open place is what I mean.

However, because we'll also experiment with other word orders and sentence chunking techniques, there will be an even greater number of possible chunk combinations in a real-world system:

- I make an effort to run in the most beautiful open areas.

- The more orderly the open area, the more I want to run.

- I make an effort to visit the cleaner seaside.

- To forget at the cleanest beach, that is what I mean.

Now, you have to go over each of these produced sentences and select the one that sounds the "most human."

In order to accomplish this, we match each generated sentence to millions of authentic sentences from English-language literature and news articles. The more English-language literature we can obtain, the better.

Consider the following potential translation:

I make an effort to depart through the most beautiful open area.

This sentence is probably unique among all the sentences in our data collection because no one has ever written a sentence like it in English. We will assign a low probability score to this potential translation.

However, consider this potential translation:

I wish to visit the most beautiful beach.

This line will receive a high likelihood score because it resembles something in our training set.

We will select the sentence that most closely resembles genuine English sentences overall and has the highest likelihood of translating into chunks after attempting every conceivable combination.

The phrase "I want to go to the prettiest beach" would be our ultimate translation. Not too bad!

A Significant Advancement Was Statistical Machine Translation

If you provide statistical machine translation systems with enough training data, they outperform rule-based systems in many cases. In the early 2000s, Franz Josef Och built Google Translate by refining these concepts. The globe could now finally use machine translation.

Everyone was initially shocked to learn that the "dumb" method of translating, which relied on probability rather than linguists' rule-based methods, was more successful. This gave rise to a (very derogatory) proverb among scholars in the 1980s:

Statistical Machine Translation's Restrictions

Systems for statistical machine translation perform well, but they are difficult to create and manage. Experts must adjust and fine-tune a new multi-step translation pipeline for each new pair of languages you wish to translate.

Trade-offs must be made because building these many pipelines requires a great deal of labor. There aren't enough translations from Georgian to Telegu for Google to make a significant investment in that language pair, therefore if you ask it to translate Georgian to Telegu, it will first need to translate it internally into English. Additionally, if you had asked it to translate from French to English, which is the more popular option, it might have done so using a less sophisticated translation pipeline.

If we could get the computer to handle all that tedious development work for us, wouldn't that be awesome?

Improving Computer Translation — Without All Those Pricey Personnel

A black box system that can translate text on its own by merely observing training data is considered the holy grail of machine translation. The multi-step statistical models still require human construction and adjustment when using statistical machine translation.

KyungHyun Cho's group achieved a significant advancement in 2014. They developed this black box system by figuring out how to use deep learning. Without the need for human assistance, its deep learning model learns how to translate between those two languages by utilizing parallel corpora.

This is made possible by two key concepts: encodings and recurrent neural networks. We can create a self-learning translation system by deftly fusing these two concepts.

Neural Networks with Recurrent Architectures

Recurrent neural networks are something we covered in Part 2 earlier, but let's recap briefly.

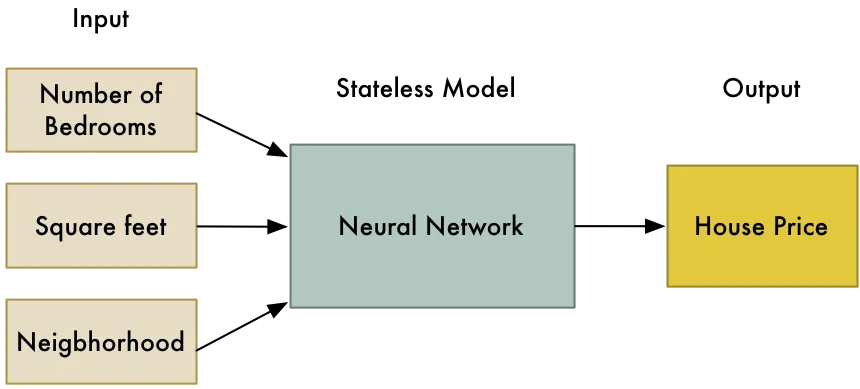

A generic machine learning technique that receives a list of numbers and computes a result (based on prior training) is a regular (non-recurrent) neural network. Numerous issues can be resolved by using neural networks as a black box. For instance, we can estimate a house's estimated value using a neural network based on its attributes:

Neural networks, however, lack state, just as the majority of machine learning methods. A list of numbers is inputted, and a result is computed by the neural network. It will always compute the same result if you enter those same values again. It doesn't remember previous computations. Stated otherwise, 2 + 2 is always equal to 4.

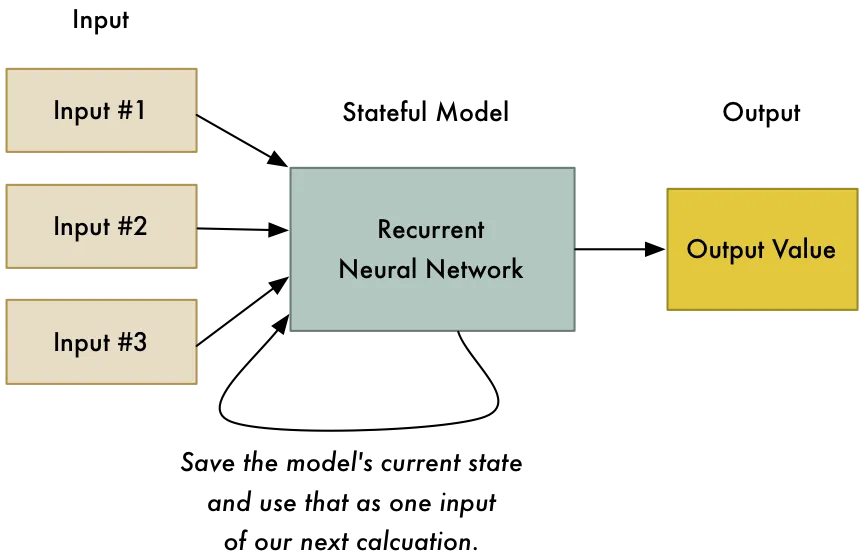

Recurrent neural networks, or RNNs for short, are slightly modified neural network models in which one of the inputs to the subsequent computation is the neural network's prior state. This implies that the outcomes of earlier computations affect those of later computations!

How could we possibly want to do this? Regardless of the last calculation we made, shouldn't 2 + 2 always equal 4?

Neural networks may identify patterns in a series of data by using this approach. It can be used, for instance, to determine, from the first few words of a sentence, what word is most likely to come next:

RNNs come in handy if you need to identify patterns in data. RNNs are becoming more and more common in many fields of natural language processing since human language is basically one large, complex pattern.

To gain further insight into RNNs, you may read Part 2, in which we generated a fake Ernest Hemingway book using one, and then created a false Super Mario Bros. level with another.

Codings

We also need to review the concept of encodings. In Part 4, we covered encodings as a component of face recognition. Let's take a brief digression on how we can use computers to distinguish between two humans in order to understand encodings.

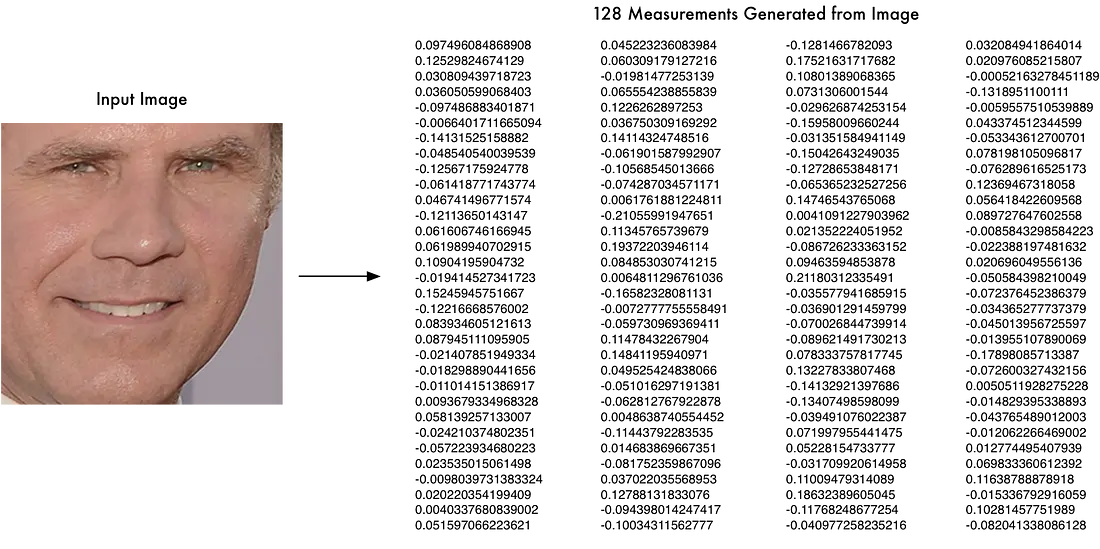

When using a computer to distinguish between two faces, you take several measures from each face and compare the results. To determine if two images depict the same individual, we may, for instance, measure the width between the eyes or the size of each ear.

This concept is probably already known to you if you've watched any primetime detective series like CSI:

One example of an encoding is the concept of converting a face into a series of measurements. We are converting a set of measurements that represent raw data—a picture of a face—into an encoded list.

However, as we saw in Part 4, we are not required to measure ourselves using a predetermined set of visual features. Alternatively, we can create measurements from a face using a neural network. The computer is more adept than humans in determining which metrics are most useful in distinguishing between two individuals who are similar to each other:

This is how we encoded it. It allows us to use something as basic as 128 digits to represent something as complex as a picture of a face. Because we no longer need to compare entire photos when comparing two different faces, it is now much simpler to compare just these 128 integers for each face.

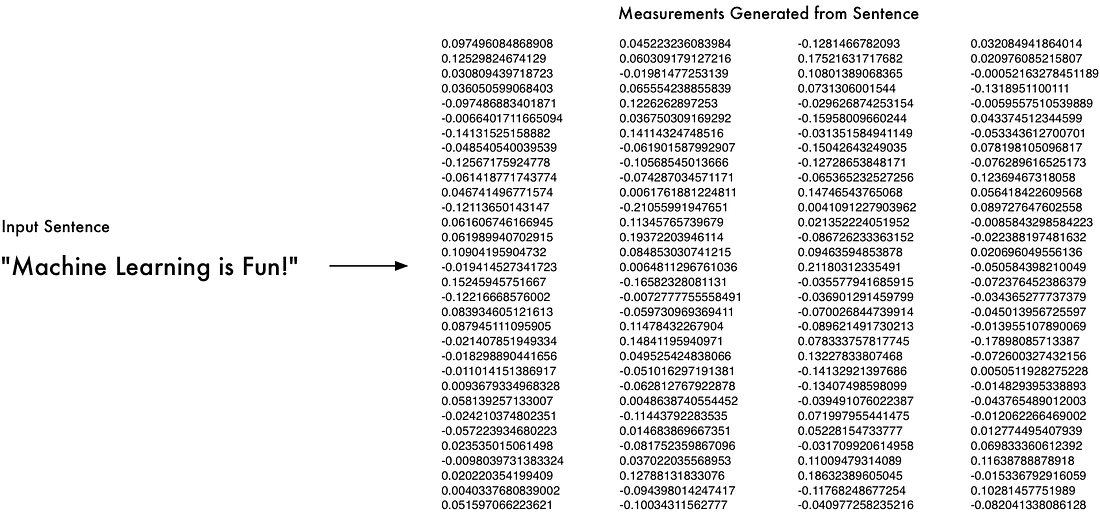

What do you think? With phrases, we can accomplish the same thing! We may devise an encoding that takes any conceivable statement and converts it into a set of distinct numbers:

We will feed the sentence into the RNN one word at a time in order to create this encoding. The values that comprise the full sentence will be the final output once the final word has been processed:

That's great to know that we can now express a whole sentence as a collection of distinct numbers! It doesn't really matter that we don't know the meaning of every number in the encoding. We don't need to know the precise method used to create the numbers as long as each sentence can be uniquely identifiable by its own set of numbers.

Come on, let's translate!

Alright, so now we know how to convert a sentence into a group of distinct numbers using an RNN. In what way does that benefit us? This is when things truly get interesting!

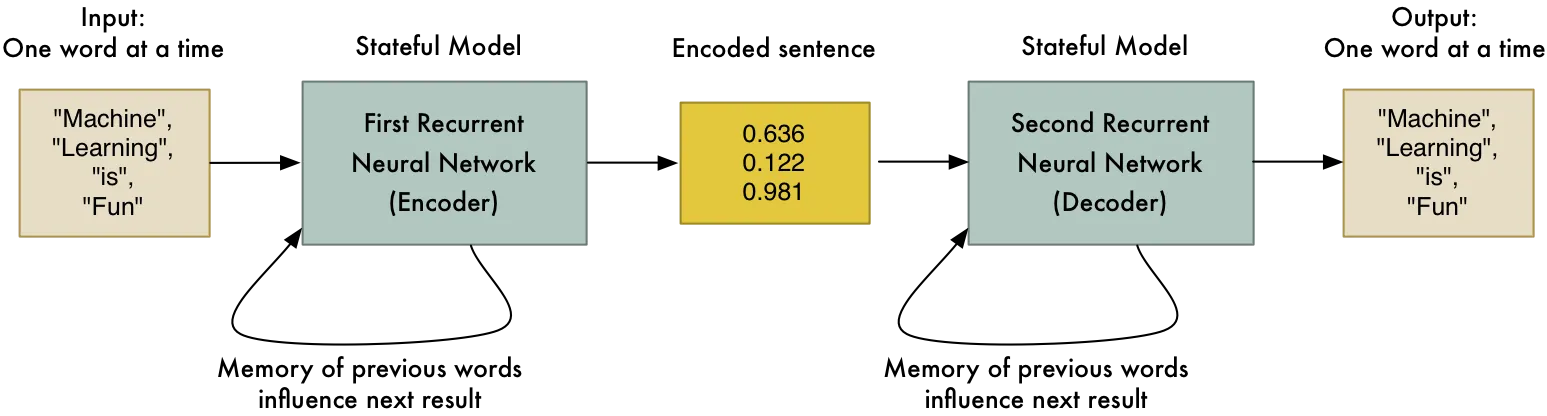

What would happen if we connected two RNNs end to end? The encoding for a sentence could be produced by the first RNN. The original sentence might then be decoded once more by the second RNN using that encoding and the same reasoning applied in reverse:

The ability to encode and then decode the original sentence again is obviously not very useful. However, here's the big idea: what if we could use our parallel corpus training data to train the second RNN to decode the sentence into Spanish rather than English?

And voilà, we now have a general method for translating a list of English words into a corresponding list of Spanish words!

This is an effective concept:

The main constraints for this strategy are the quantity of training data available and the computing power available. This was just developed by machine learning researchers two years ago, but it already outperforms statistical machine translation systems that took twenty years to create.

It is not necessary to understand any grammar rules in order to perform this. These rules are determined by the algorithm on its own. This implies that you don't require professionals to fine-tune each stage of your translation process. That is done for you by the computer.

Almost any type of sequence-to-sequence problem may be solved with this method! As it happens, sequence-to-sequence difficulties make up a large number of fascinating problems. For more awesome things you can do, keep reading!

Please take note that we left off some details that are necessary to make this function with actual data. For instance, handling sentences of varying lengths in the input and output requires more work (see bucketing and padding). There are also problems with accurately interpreting uncommon terms.

Constructing Your Own Translation System from Sequence to Sequence

TensorFlow comes with a functioning demo for translating between English and French if you wish to create your own language translation system. But this isn't for the weak of heart or those on a tight budget. This technology requires a lot of resources and is still quite young. To train your own language translation system, even with a powerful computer and a top-tier video card, you may need to dedicate approximately one month of uninterrupted processing time.

Furthermore, the speed at which sequence-to-sequence language translation algorithms are developing makes it difficult to stay up. Results are greatly improving due to a number of recent advances, however there aren't even any wikipedia pages for them yet. Examples of these developments include adding an attention mechanism and tracking context. You must stay up to date with new advancements as they happen if you intend to pursue sequence-to-sequence learning seriously.

The Preposterous Potential of Sequence-to-Sequence Frameworks

What further uses are there for sequence-to-sequence models?

Google researchers demonstrated over a year ago that AI bots may be constructed using sequence-to-sequence models. The concept is so basic that it's astounding it functions at all.

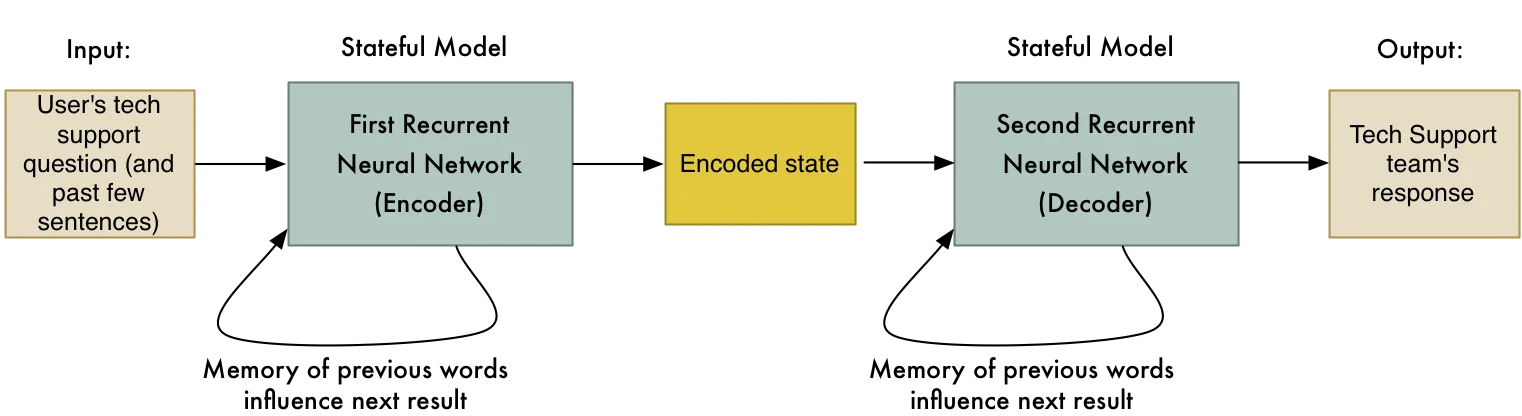

Initially, they recorded conversations between Google staff members and the company's tech support staff. The Tech Support team's response served as the "translation" of the employee's query, which served as the input sentence in the sequence-to-sequence model that was subsequently trained.

In order to receive a response from the bot during user interaction, the user would "translate" each message with the system.

The outcome was a somewhat sophisticated bot that could occasionally respond to legitimate tech help inquiries. An excerpt of a sample user-bot discussion from their paper is provided here:

Human: rejected connection or anything similar

Machine: Could you tell me which version of the network you are connected to?

Human: Could be wrong about that

- Machine: Does it ask for a user certificate when it connects to the network?

- Human: indeed

- Machine: Are you currently at work or at home? workplace?

- Man: I'm at home. Computer: Give it a go. navigate to the <URL redacted> page and provide your login credentials.

- Human: completed

- Machine: see if it's functioning properly now by login in using <NAME redacted>.

- Human: Well, it functions now!

- Fantastic machine. What more can I do to help?

They also tried building a chat bot based on millions of movie subtitles. The idea was to use conversations between movie characters as a way to train a bot to talk like a human. The input sentence is a line of dialog said by one character and the “translation” is what the next character said in response:

The outcomes of this were really intriguing. The robot not only communicated like a human, but it also showed a little intelligence:

Mi nombre es David. ¿Cuál es mi nombre?

David es la máquina.

Humano: mi nombre es John. ¿Cuál es mi nombre?

Maquinaria:

- John. Human: ¿eres un líder o un seguidor?

- Machine: Soy un líder.

- Human: ¿eres seguidor o líder?

- Machine: Soy un líder.

Esto es solo el comienzo de las posibilidades. Nos limitamos a convertir una oración en otra. ¡También es posible crear un modelo de imagen a secuencia que pueda convertir una imagen en texto!

Otro equipo de Google lo hizo al sustituir el primer RNN por una CNN (como aprendimos en el capítulo 3). Esto permite que el ingreso sea una imagen en lugar de una oración. El resto funciona básicamente el

También podemos convertir imágenes en palabras de la misma manera (siempre y cuando tengamos una gran cantidad de datos de capacitación).

Andrej Karpathy expanded on these concepts to create a system capable of describing images in great detail by processing multiple regions of an image separately:

Esto permite la creación de motores de búsqueda de imágenes capaces de encontrar imágenes que se ajustan a preguntas de búsqueda inusuales:

¡Incluso hay investigadores que trabajan en el problema inverso, creando una imagen completa basada en una descripción textual!

Desde estos ejemplos, es posible comenzar an imaginar las posibilidades. So far, sequencing has been used in everything from speech recognition to computer vision. Creo que en el próximo año habrá mucho más.

These are some recommended resources if you want to learn more about sequence-to-sequence models and translation:

El curso de CS224D de Richard Socher sobre redes neurológicas adorables recurrentes para la traducción mecánica (video)

La charla de CS224D de Thang Luong sobre la transacción de la máquina nerviosa (PDF)

La descripción de la modelación de Seq2Seq de TensorFlow

El capítulo sobre el aprendizaje sequencial en el libro de aprendizaje profundo (PDF)

Please consider joining my Machine Learning is Fun! email list if you enjoyed this article. I will only email you when I have something new and amazing to share. La mejor manera de aprender cuando escriba más artículos como este es así.

También puedes seguirme en Twitter en @ageitgey, escribirme por correo electrónico o encontrarme en LinkedIn. Please let me know if I can help you or your team with machine learning.

Continuar con el aprendizaje automático es divertido ahora! ¡Capítulo 6!

Comments

You reported that adequately! web page You have made the point! casino en ligne Appreciate it, Lots of data! casino en ligne You made your position very clearly!. casino en ligne Cheers! I value this! casino en ligne Kudos! I appreciate it! casino en ligne Kudos! I value it. casino en ligne Thanks! Quite a lot of information. casino en ligne You actually suggested it well! casino en ligne You actually reported it fantastically. casino en ligne

2025-05-30 14:05:06